Computational Physics Basics: Floating Point Numbers

Posted 25th May 2021 by Holger

In a previous contribution, I showed that computers are naturally suited to storing finite-length integers. Most quantities in physics, on the other hand, are real numbers, and computers can store these only with finite precision. As with integers, each representation of a real number is stored in a finite number of bits. Two aspects need to be considered. The precision of the stored number is the number of significant decimal places that can be represented. Higher precision means that the error of the representation can be made smaller. But precision is not the only aspect that needs consideration. Often, physical quantities can be very large or very small. The electron charge in SI units, for example, is roughly $1.602\times10^{-19}$ C. Using a fixed-point decimal format to represent this number would require a large number of unnecessary zeros to be stored. Therefore, the range of numbers that can be represented is also important.

In the decimal system, we already have a notation that can capture very large and very small numbers and I have used it to write down the electron charge in the example above. The scientific notation writes a number as a product of a mantissa and a power of 10. The value of the electron charge (without units) is written as

$$1.602\times10^{-19}.$$

Here 1.602 is the mantissa (or the significand) and -19 is the exponent. The general form is

$$m\times 10^n.$$

The mantissa, $m$, always satisfies $1\le m<10$, and the exponent, $n$, has to be chosen accordingly. This format translates directly into the binary system. Here, any number can be written as

$$m\times2^n,$$

with $1\le m<2$. Both $m$ and $n$ can be stored in binary form.
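As a small illustration, the decomposition into $m$ and $n$ can be sketched in Python. The helper name `binary_scientific` is my own choice for this sketch. It relies on the standard library function `math.frexp`, which returns a fraction in the interval $[0.5, 1)$, so the result is shifted by one binary place to match the convention $1\le m<2$ used here. The sketch assumes a finite, non-zero input.

```python
import math

def binary_scientific(x):
    """Return (m, n) such that x == m * 2**n with 1 <= |m| < 2.

    Hypothetical helper for illustration; assumes x is finite and non-zero.
    """
    # math.frexp gives x == frac * 2**exp with 0.5 <= |frac| < 1,
    # so doubling frac and lowering exp by one yields 1 <= |m| < 2.
    frac, exp = math.frexp(x)
    return frac * 2.0, exp - 1

m, n = binary_scientific(5.25)
print(m, n)  # 1.3125 2

# The electron charge from the text, without units
m_e, n_e = binary_scientific(1.602e-19)
print(m_e, n_e)
```

Because scaling by a power of two is exact in binary floating point, the product `m * 2**n` reproduces the input without any additional rounding.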

Memory layout of floating-point numbers, the IEEE 754 standard

In most modern computers, numbers are stored using 64 bits, but some platforms, such as smartphones, might only use 32 bits. For a given number of bits, a decision has to be made on how many bits should be used to store the mantissa and how many bits should be used for the exponent. The IEEE 754 standard sets out the memory layout for floating-point representations of various sizes, and almost all hardware supports these specifications. The following table shows the number of bits for mantissa and exponent for some IEEE 754 number formats.

| Bits | Name | Sign bit | Mantissa bits, m | Exponent bits, p | Exponent bias | Decimal digits |
|---|---|---|---|---|---|---|
| 16 | half-precision | 1 | 10 | 5 | 15 | 3.31 |
| 32 | single precision | 1 | 23 | 8 | 127 | 7.22 |
| 64 | double precision | 1 | 52 | 11 | 1023 | 15.95 |
| 128 | quadruple precision | 1 | 112 | 15 | 16383 | 34.02 |
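The last two columns of the table can be reproduced from the bit counts. The following is a minimal sketch, assuming that the bias is $2^{p-1}-1$ and that the number of decimal digits is $(m+1)\log_{10}2$, where the extra bit accounts for the leading bit of the mantissa that is not stored. The dictionary `formats` and the two function names are my own and only serve this illustration.

```python
import math

# (total bits, stored mantissa bits m, exponent bits p), taken from the table
formats = {
    "half": (16, 10, 5),
    "single": (32, 23, 8),
    "double": (64, 52, 11),
    "quadruple": (128, 112, 15),
}

def exponent_bias(p):
    """Bias for a p-bit exponent field: a zero bit followed by p-1 ones."""
    return 2 ** (p - 1) - 1

def decimal_digits(m):
    """Equivalent decimal digits of m stored bits plus one implicit bit."""
    return (m + 1) * math.log10(2)

for name, (bits, m, p) in formats.items():
    # One sign bit plus mantissa and exponent bits fill the whole word
    assert 1 + m + p == bits
    print(f"{name:10s} bias={exponent_bias(p):6d} digits={decimal_digits(m):.2f}")
```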

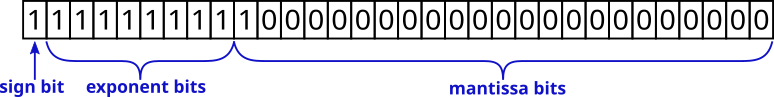

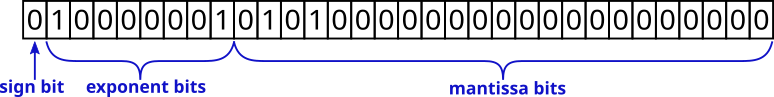

The layout of the bits is as follows. The first, most significant bit represents the sign of the number. A 0 indicates a positive number and a 1 indicates a negative number. The next $p$ bits store the exponent. The exponent is not stored as a signed integer, but as an unsigned integer with an offset. This offset, or bias, is chosen to be $2^{p-1} - 1$ so that a leading zero followed by all ones corresponds to an exponent of 0. For single precision, with $p=8$, this gives the bias of 127 listed in the table.

The remaining bits store the mantissa. The mantissa is always at least 1 and less than 2. This means that, in binary, the leading bit is always equal to one and doesn’t need to be stored. The $m$ bits, therefore, only store the fractional part of the mantissa. This gains one extra bit of precision.
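To make the layout concrete, here is a sketch that splits a 64-bit double into the three fields and then reassembles the value. The function names `decode_double` and `rebuild_normal` are hypothetical. The sketch uses the standard `struct` module to reinterpret the bytes of a float as an unsigned integer and assumes the double-precision values from the table (52 mantissa bits, 11 exponent bits, bias 1023).

```python
import struct

def decode_double(x):
    """Split a 64-bit float into (sign, stored exponent, fraction bits)."""
    # Reinterpret the 8 bytes of the double as one unsigned 64-bit integer
    (bits,) = struct.unpack(">Q", struct.pack(">d", x))
    sign = bits >> 63                  # most significant bit
    exponent = (bits >> 52) & 0x7FF    # next 11 bits, still including the bias
    fraction = bits & ((1 << 52) - 1)  # lowest 52 bits
    return sign, exponent, fraction

def rebuild_normal(sign, exponent, fraction):
    """Reassemble a regular number; not valid for exponent fields 0 or 2047."""
    mantissa = 1 + fraction / 2**52    # restore the implicit leading one
    return (-1) ** sign * mantissa * 2.0 ** (exponent - 1023)

fields = decode_double(-0.15625)       # -0.15625 == -1.25 * 2**-3
print(fields)
print(rebuild_normal(*fields))         # -0.15625
```

The two exponent values excluded in `rebuild_normal` are the ones reserved for special purposes, which is why the reconstruction only covers numbers in the regular range.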

Example

Consider the number 5.25 represented as a 32-bit floating-point number. In binary, the number is $101.01 = 1.0101\times2^2$. The fractional part of the mantissa, 0101 followed by zeros, is stored in the mantissa bits. The stored exponent is $127+2=129$, which is 10000001 in binary.
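This example can be checked with a few lines of Python. The sketch below packs 5.25 as a single-precision number using the standard `struct` module and prints the sign, exponent, and mantissa bits separately. The helper name `float32_bits` is my own.

```python
import struct

def float32_bits(x):
    """Return the sign, exponent and mantissa bits of x as a 32-bit float."""
    # Pack as big-endian single precision, then read back as unsigned int
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    s = f"{bits:032b}"
    return s[0], s[1:9], s[9:]

sign, exponent, fraction = float32_bits(5.25)
print(sign, exponent, fraction)
# 0 10000001 01010000000000000000000

# Removing the bias of 127 recovers the exponent
print(int(exponent, 2) - 127)  # 2
```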

Infinity and NaN

The IEEE 754 standard defines special numbers that represent infinity and the not-a-number state. Infinity is used to show that the result of a computation has exceeded the allowed range. It can also result from dividing a non-zero number by zero. Infinity is represented by the maximum exponent, i.e. all $p$ bits of the exponent are set to 1. In addition, the $m$ bits of the mantissa are set to 0. The sign bit is still used for infinity. This means it is possible to store a +Inf and a -Inf value.

Example

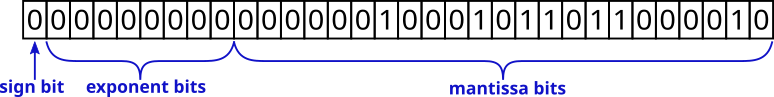
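The bit patterns of the two infinities can also be inspected directly. The following sketch prints them for single precision as hexadecimal numbers, using a hypothetical helper `float32_hex`, and shows an overflow in double precision. Note that Python itself raises a `ZeroDivisionError` for a floating-point division by zero instead of returning infinity, so the overflow is produced by a multiplication here.

```python
import math
import struct

def float32_hex(x):
    """Bit pattern of x as a 32-bit float, written as 8 hex digits."""
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    return f"{bits:08x}"

# Exponent bits all one, mantissa bits all zero; only the sign bit differs
print(float32_hex(math.inf))   # 7f800000
print(float32_hex(-math.inf))  # ff800000

# Exceeding the largest double (about 1.8e308) produces infinity
big = 1.0e308
print(big * 10.0)              # inf
```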

The special state NaN is used to store results that are not defined or can’t otherwise be represented. For example, the operation $\sqrt{-1}$ will result in a not-a-number state. Similar to infinity, it is represented by setting all $p$ exponent bits to 1. To distinguish it from infinity, the mantissa can have any non-zero value.
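A short sketch shows how NaN behaves in practice. In Python, `math.sqrt(-1.0)` raises a `ValueError` rather than returning NaN, so the example below produces a NaN from the undefined operation $\infty-\infty$ instead. The exact mantissa bits of the resulting NaN depend on the platform, so the sketch only checks that the exponent bits are all one and the mantissa is non-zero.

```python
import math
import struct

nan = math.inf - math.inf      # an undefined result
print(math.isnan(nan))         # True
print(nan == nan)              # False, NaN compares unequal even to itself

# Inspect the 64-bit pattern: exponent all ones, mantissa non-zero
(bits,) = struct.unpack(">Q", struct.pack(">d", nan))
exponent = (bits >> 52) & 0x7FF
fraction = bits & ((1 << 52) - 1)
print(exponent == 0x7FF, fraction != 0)  # True True
```

The comparison `nan == nan` being false is the reason why a dedicated test such as `math.isnan` is needed to detect the not-a-number state.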

Subnormal Numbers

As stated above, all numbers in the regular range are represented by a mantissa of at least 1 and less than 2, so that the leading bit is always 1. Numbers very close to zero will have a small exponent value. Once the stored exponent bits are all zero, it is better to explicitly store all bits of the mantissa and allow the first bit to be zero. This allows even smaller numbers to be represented than would otherwise be possible. Extending the range in this way comes at the cost of reduced precision of the stored number.

Example
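As a rough sketch in Python, the transition from regular to subnormal numbers can be observed in double precision. The helper `stored_exponent` is hypothetical and returns the raw exponent bits. The smallest regular number is available as `sys.float_info.min`, and the smallest subnormal number is assumed here to be $2^{-1074}$, which has only the last mantissa bit set.

```python
import math
import struct
import sys

def stored_exponent(x):
    """Raw 11-bit exponent field of a 64-bit float."""
    (bits,) = struct.unpack(">Q", struct.pack(">d", x))
    return (bits >> 52) & 0x7FF

smallest_normal = sys.float_info.min          # 2**-1022
smallest_subnormal = math.ldexp(1.0, -1074)   # 2**-1074

print(stored_exponent(smallest_normal))       # 1
print(stored_exponent(smallest_normal / 2))   # 0, now a subnormal number
print(stored_exponent(smallest_subnormal))    # 0

# Below the smallest subnormal number nothing is left but zero
print(smallest_subnormal / 2)                 # 0.0
```

The number `smallest_subnormal` carries only a single significant bit, which illustrates the loss of precision that comes with the extended range.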

Floating Point Numbers in Python, C++, and JavaScript

Both Python and JavaScript exclusively store floating-point numbers using 64-bit precision. In fact, in JavaScript, all values of the number type are stored as 64-bit floating-point numbers, even integers. This is why integers in JavaScript only have 53 bits. They are stored in the mantissa of the 64-bit floating-point number, which has 52 stored bits plus the implicit leading bit.
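The 53-bit limit can be demonstrated in Python as well, because Python floats use the same 64-bit format. The following sketch compares exact Python integers around $2^{53}$ with their floating-point counterparts.

```python
import sys

# Number of mantissa bits, including the implicit leading bit
print(sys.float_info.mant_dig)               # 53

limit = 2 ** 53
print(float(limit - 1) == limit - 1)         # True, still exactly representable
print(float(limit + 1) == limit + 1)         # False, rounded to a neighbour
print(float(limit) + 1.0 == float(limit))    # True, adding 1 has no effect
```

Beyond $2^{53}$ the spacing between neighbouring floating-point numbers is larger than 1, so not every integer can be stored exactly any more.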

C++ offers a choice of different precisions:

| Type | Alternative Name | Number of Bits |
|---|---|---|
| float | single precision | usually 32 bits |
| double | double precision | usually 64 bits |
| long double | extended precision | architecture-dependent, not IEEE 754, usually 80 bits |
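To stay with one language for the examples, the sizes of these C types can be queried from Python through the standard `ctypes` module. This is only a sketch: `ctypes.sizeof` reports the storage size including any padding, so an 80-bit `long double` may show up as 96 or 128 bits, and the result for `c_longdouble` depends on the platform. The second part round-trips 0.1 through single precision to show the reduced precision of a 32-bit `float`.

```python
import ctypes
import struct

# Storage size of the C floating-point types on this platform
for ctype in (ctypes.c_float, ctypes.c_double, ctypes.c_longdouble):
    print(ctype.__name__, 8 * ctypes.sizeof(ctype), "bits of storage")

# Store 0.1 as a 32-bit float and read it back as a Python float
as_single = struct.unpack("f", struct.pack("f", 0.1))[0]
print(as_single)  # 0.10000000149011612
```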
