Computational Physics Basics: Floating Point Numbers

Posted 25th May 2021 by Holger Schmitz

In a previous contribution, I showed that computers are naturally suited to storing finite-length integers. Most quantities in physics, on the other hand, are real numbers. Computers can store real numbers only with finite precision. As with integers, each representation of a real number is stored in a finite number of bits. Two aspects need to be considered. The precision of the stored number is the number of significant decimal places that can be represented. Higher precision means that the error of the representation can be made smaller. But precision is not the only aspect that needs consideration. Often, physical quantities can be very large or very small. The electron charge in SI units, for example, is roughly $1.602\times10^{-19}$C. Using a fixed-point decimal format to represent this number would require a large number of unnecessary zeros to be stored. Therefore, the range of numbers that can be represented is also important.

In the decimal system, we already have a notation that can capture very large and very small numbers and I have used it to write down the electron charge in the example above. The scientific notation writes a number as a product of a mantissa and a power of 10. The value of the electron charge (without units) is written as

$$1.602\times10^{-19}.$$

Here 1.602 is the mantissa (or the significand) and -19 is the exponent. The general form is

$$m\times 10^n.$$

The mantissa, $m$, always satisfies $1\le m<10$ and the exponent, $n$, has to be chosen accordingly. This format translates directly into the binary system. Here, any number can be written as

$$m\times2^n,$$

with $1\le m<2$. Both $m$ and $n$ can be stored in binary form.
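As a minimal sketch of this decomposition, the Python standard library function `math.frexp` can be used. It returns a fraction between 0.5 and 1, so the helper below (the name `binary_scientific` is only illustrative) rescales the result to the convention $1\le m<2$ used here.

```python
import math

def binary_scientific(x):
    """Return (m, n) with x == m * 2**n and 1 <= |m| < 2.

    Assumes x is finite and non-zero.
    """
    frac, exp = math.frexp(x)      # frexp yields 0.5 <= |frac| < 1
    return frac * 2.0, exp - 1     # shift one factor of 2 into the mantissa

m, n = binary_scientific(5.25)
print(m, n)  # 1.3125 2

# The electron charge (without units) in the same form
m, n = binary_scientific(1.602e-19)
print(m, n)
```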

Memory layout of floating-point numbers, the IEEE 754 standard

In most modern computers, numbers are stored using 64 bits but some architectures, like smartphones, might only use 32 bits. For a given number of bits, a decision has to be made on how many bits should be used to store the mantissa and how many bits should be used for the exponent. The IEEE 754 standard sets out the memory layout for various size floating-point representations and almost all hardware supports these specifications. The following table shows the number of bits for mantissa and exponent for some IEEE 754 number formats.

| Bits | Name | Sign bit | Mantissa bits, m | Exponent bits, p | Exponent bias | Decimal digits |
|---|---|---|---|---|---|---|
| 16 | half-precision | 1 | 10 | 5 | 15 | 3.31 |
| 32 | single precision | 1 | 23 | 8 | 127 | 7.22 |
| 64 | double precision | 1 | 52 | 11 | 1023 | 15.95 |
| 128 | quadruple precision | 1 | 112 | 15 | 16383 | 34.02 |

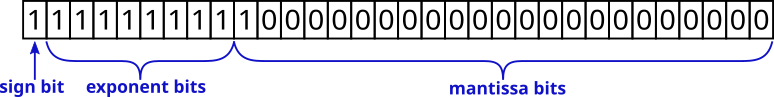

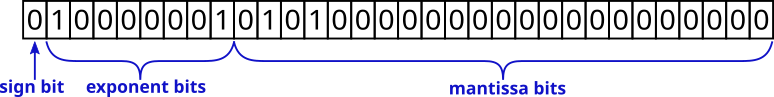

The layout of the bits is as follows. The first, most significant bit represents the sign of the number. A 0 indicates a positive number and a 1 indicates a negative number. The next $p$ bits store the exponent. The exponent is not stored as a signed integer, but as an unsigned integer with an offset. This offset, or bias, is chosen to be $2^{p-1} - 1$ so that a leading zero followed by all ones corresponds to an exponent of 0. For single precision, $p=8$ gives a bias of $2^7-1=127$.

The remaining bits store the mantissa. The mantissa always satisfies $1\le m<2$. This means that, in binary, the leading bit is always equal to one and doesn’t need to be stored. The $m$ bits, therefore, only store the fractional part of the mantissa. This allows for one extra bit to improve the precision of the number.
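The bias and decimal-digit columns of the table can be reproduced with a few lines of Python. This is only a sketch: the function names are illustrative, the bias is computed as $2^{p-1}-1$, and the number of decimal digits is taken as $(m+1)\log_{10}2$, counting the implicit leading bit.

```python
import math

# (total bits, mantissa bits m, exponent bits p) as listed in the table
FORMATS = {
    "half": (16, 10, 5),
    "single": (32, 23, 8),
    "double": (64, 52, 11),
    "quadruple": (128, 112, 15),
}

def exponent_bias(p):
    """Bias for an exponent field of p bits."""
    return 2 ** (p - 1) - 1

def decimal_digits(m):
    """Equivalent decimal digits of m stored bits plus the implicit leading bit."""
    return (m + 1) * math.log10(2)

for name, (bits, m, p) in FORMATS.items():
    assert 1 + m + p == bits  # sign bit + mantissa + exponent fill the word
    print(name, exponent_bias(p), round(decimal_digits(m), 2))
```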

Example

Consider the number 5.25 represented as a 32-bit floating-point number. In binary, the number is $101.01 = 1.0101\times2^2$. The fractional part of the mantissa, 0101 followed by zeros, is stored in the mantissa bits. The stored exponent is $127+2=129$.
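The bit pattern of this example can be inspected from Python with the standard `struct` module. The helper name `float32_fields` is an assumption made for this sketch. It packs the value as a big-endian single precision number and splits the resulting 32 bits into the three fields.

```python
import struct

def float32_fields(x):
    """Split x, rounded to single precision, into (sign, exponent, fraction) fields."""
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    sign = bits >> 31                # 1 bit
    exponent = (bits >> 23) & 0xFF   # 8 bits, stored with bias 127
    fraction = bits & 0x7FFFFF       # 23 bits, leading 1 not stored
    return sign, exponent, fraction

sign, exponent, fraction = float32_fields(5.25)
print(sign, format(exponent, "08b"), format(fraction, "023b"))
# 0 10000001 01010000000000000000000
print(exponent - 127)  # 2
```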

Infinity and NaN

The IEEE 754 standard defines special numbers that represent infinity and the not-a-number state. Infinity is used to show that a result of a computation has exceeded the allowed range. It can also result from a division by zero. Infinity is represented by the maximum exponent, i.e. all $p$ bits of the exponent are set to 1. In addition, the $m$ bits of the mantissa are set to 0. The sign bit is still used for infinity. This means it is possible to store a +Inf and a -Inf value.

Example

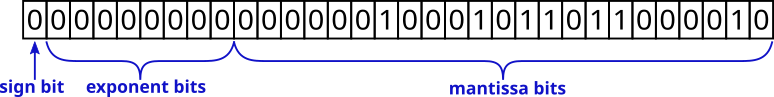

The special state NaN is used to store results that are not defined or can’t otherwise be represented. For example, the operation $\sqrt{-1}$ will result in a not-a-number state. Similar to infinity, it is represented by setting all $p$ exponent bits to 1. To distinguish it from infinity, the mantissa can have any non-zero value.
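The encodings of infinity and NaN can be checked with a short Python sketch. It assumes the single precision layout described above, and `float32_bits` is an illustrative helper. Note that Python itself raises exceptions for `1.0 / 0.0` and `math.sqrt(-1)` instead of returning these values, so the special values are taken from the `math` module here.

```python
import math
import struct

def float32_bits(x):
    """Return the 32-bit single precision pattern of x as a string of 0s and 1s."""
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    return format(bits, "032b")

print(float32_bits(math.inf))    # 01111111100000000000000000000000
print(float32_bits(-math.inf))   # 11111111100000000000000000000000

nan_bits = float32_bits(math.nan)
print(nan_bits[1:9])               # exponent field: 11111111
print(int(nan_bits[9:], 2) != 0)   # mantissa is non-zero: True

print(1e308 * 10)                # exceeds the double range: inf
print(math.nan == math.nan)      # NaN compares unequal to everything: False
```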

Subnormal Numbers

As stated above, all numbers in the regular range will be represented by a mantissa between 1 and 2 so that the leading bit is always 1. Numbers very close to zero will have a small exponent value. Once the stored exponent field is exactly zero, it is better to explicitly store all bits of the mantissa and allow the first bit to be zero. This allows even smaller numbers to be represented than would otherwise be possible. Extending the range in this way comes at the cost of reduced precision of the stored number.

Example
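A minimal Python sketch of this behaviour for 64-bit numbers is given below. It assumes the double precision layout from the table above (52 mantissa bits, 11 exponent bits), and the helper name is illustrative. The smallest normal number has an exponent field of 1, while subnormal numbers have an exponent field of 0.

```python
import math
import struct
import sys

def float64_exponent_field(x):
    """Return the stored (biased) 11-bit exponent field of a double."""
    (bits,) = struct.unpack(">Q", struct.pack(">d", x))
    return (bits >> 52) & 0x7FF

smallest_normal = sys.float_info.min           # 2**-1022
smallest_subnormal = math.ldexp(1.0, -1074)    # only the last mantissa bit is set

print(float64_exponent_field(smallest_normal))     # 1
print(float64_exponent_field(smallest_subnormal))  # 0
print(smallest_normal / 2 > 0.0)                   # still representable: True
print(smallest_subnormal / 2)                      # underflows to 0.0
```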

Floating Point Numbers in Python, C++, and JavaScript

Both Python and JavaScript exclusively store floating-point numbers using 64-bit precision. In fact, in JavaScript, all numbers are stored as 64-bit floating-point, even integers. This is why integers in JavaScript are exact only up to 53 bits: they are stored in the mantissa of the 64-bit floating-point number, which has 52 stored bits plus the implicit leading bit.
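The 53-bit limit can be seen directly in Python, whose `float` type uses the same 64-bit format. The following lines are a small sketch using only the standard library.

```python
import sys

print(sys.float_info.mant_dig)   # 53 bits of mantissa, including the implicit one

limit = 2 ** 53
# Above 2**53 not every integer has its own floating-point representation
print(float(limit) == float(limit + 1))       # True
# Below the limit neighbouring integers are still distinct
print(float(limit - 1) == float(limit - 2))   # False
```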

C++ offers a choice of different precisions:

| Type | Alternative Name | Number of Bits |
|---|---|---|
| float | single precision | usually 32 bits |
| double | double precision | usually 64 bits |
| long double | extended precision | architecture-dependent, not IEEE 754, usually 80 bits |
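The difference between single and double precision can be emulated in Python by rounding a value to 32 bits and back with the `struct` module. This sketch assumes that the C++ `float` is a 32-bit IEEE 754 number, which is the usual but not guaranteed case, and `to_single` is an illustrative name.

```python
import struct

def to_single(x):
    """Round a Python float (double precision) to single precision and back."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

x = 0.1
print(to_single(x) == x)        # False: 0.1 is rounded differently in 32 bits
print(abs(to_single(x) - x))    # rounding error of roughly 1.5e-9
print(to_single(0.5) == 0.5)    # True: 0.5 is exact in both formats
```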

Computational Physics Basics: Integers in C++, Python, and JavaScript

Posted 5th August 2020 by Holger Schmitz

In a previous post, I wrote about the way that the computer stores and processes integers. This description referred to the basic architecture of the processor. In this post, I want to talk about how different programming languages present integers to the developer. Programming languages add a layer of abstraction and in different languages that abstraction may be less or more pronounced. The languages I will be considering here are C++, Python, and JavaScript.

Integers in C++

C++ is a language that is very close to the machine architecture compared to other, more modern languages. The data that C++ operates on is stored in the machine’s memory and C++ has direct access to this memory. This means that the C++ integer types are exact representations of the integer types determined by the processor architecture.

The following integer datatypes exist in C++:

| Type | Alternative Names | Number of Bits | G++ on Intel 64 bit (default) |
|---|---|---|---|
| char | | at least 8 | 8 |
| short int | short | at least 16 | 16 |
| int | | at least 16 | 32 |
| long int | long | at least 32 | 64 |
| long long int | long long | at least 64 | 64 |

This table does not give the exact size of the datatypes because the C++ standard does not specify the sizes but only lower limits. It is also required that the larger types must not use fewer bits than the smaller types. The exact number of bits used is up to the compiler and may also be changed by compiler options. To find out more about the regular integer types you can look at this reference page.
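To see which sizes the C compiler behind a given Python installation uses, the standard `ctypes` module can be queried. This is only a sketch: it reports the sizes on the machine it runs on, assumes 8-bit bytes, and the output therefore differs between platforms.

```python
import ctypes

# Map the C/C++ type names from the table to their ctypes counterparts
c_types = {
    "char": ctypes.c_char,
    "short": ctypes.c_short,
    "int": ctypes.c_int,
    "long": ctypes.c_long,
    "long long": ctypes.c_longlong,
}

# sizeof returns bytes; multiply by 8 assuming 8-bit bytes
sizes = {name: 8 * ctypes.sizeof(ctype) for name, ctype in c_types.items()}
for name, bits in sizes.items():
    print(f"{name}: {bits} bits")
```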

The reason for not specifying exact sizes for datatypes is the fact that C++ code will be compiled down to machine code. If you compile your code on a 16 bit processor the plain int type will naturally be limited to 16 bits. On a 64 bit processor on the other hand, it would not make sense to have this limitation.

With the exception of char, whose signedness is left to the implementation, each of these datatypes is signed by default. It is possible to add the signed qualifier before the type name to make it clear that a signed type is being used. The unsigned qualifier creates an unsigned variant of any of the types. Here are some examples of variable declarations.

```cpp
char c;              // typically 8 bit
unsigned int i = 42; // an unsigned integer initialised to 42
signed long l;       // the same as "long l" or "long int l"
```
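The effect of the signed and unsigned qualifiers can be illustrated from Python with `ctypes`, which performs no overflow checking and simply reinterprets the bits. The sketch below uses the 8-bit types so that the numbers stay small. The same 8 bits, all set to one, read as 255 when unsigned and as -1 when signed.

```python
import ctypes

print(ctypes.c_uint8(255).value)   # 255
print(ctypes.c_int8(255).value)    # -1, same bit pattern read as signed
print(ctypes.c_uint8(-1).value)    # 255
print(ctypes.c_int8(-128).value)   # -128, the smallest signed 8-bit value
```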

As stated above, the C++ standard does not specify the exact size of the integer types. This can cause bugs when developing code that should be run on different architectures or compiled with different compilers. To overcome these problems, the C++ standard library defines a number of integer types that have a guaranteed size. The table below gives an overview of these types.

| Signed Type | Unsigned Type | Number of Bits |
|---|---|---|
| int8_t | uint8_t | 8 |
| int16_t | uint16_t | 16 |
| int32_t | uint32_t | 32 |
| int64_t | uint64_t | 64 |

More details on these and similar types can be found here.
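Because the sizes of these types are fixed, their value ranges are fixed as well. The following Python sketch computes them, assuming two's complement for the signed types. The function name `int_range` is illustrative.

```python
def int_range(bits, signed=True):
    """Smallest and largest value of a fixed-size integer with the given bits."""
    if signed:
        # two's complement: one more negative value than positive values
        return -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    return 0, 2 ** bits - 1

for bits in (8, 16, 32, 64):
    print(f"int{bits}_t: {int_range(bits)}  uint{bits}_t: {int_range(bits, signed=False)}")
```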

The code below prints a 64 bit int64_t using the binary notation. As the name suggests, the bitset class interprets the memory of the data passed to it as a bitset. The bitset can be written into an output stream and will show up as binary data.

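```cpp
#include <bitset>
#include <cstdint>
#include <iostream>

// Print the 64 bits of num, most significant bit first.
void printBinaryLong(int64_t num) {
    std::cout << std::bitset<64>(num) << std::endl;
}
```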
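For comparison, a rough Python counterpart is sketched below. Python integers have no fixed width, so the helper (an illustrative name, not a standard function) masks the value to 64 bits to obtain the two's complement pattern that a C++ `int64_t` would hold.

```python
def binary_int64(num):
    """Return the 64-bit two's complement bit pattern of num as a string."""
    if not -(2 ** 63) <= num < 2 ** 63:
        raise ValueError("value does not fit into 64 bits")
    # Masking maps negative numbers onto their two's complement pattern
    return format(num & (2 ** 64 - 1), "064b")

print(binary_int64(5))    # 61 zeros followed by 101
print(binary_int64(-1))   # 64 ones
```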

Integers in Python

Unlike C++, Python hides the underlying architecture of the machine. In order to discuss integers in Python, we first have to make clear which version of Python we are talking about. Python 2 and Python 3 handle integers in a different way. The Python interpreter itself is written in C which can be regarded in many ways as a subset of C++. In Python 2, the integer type was a direct reflection of the long int type in C. This meant that integers could be either 32 or 64 bit, depending on which machine a program was running on.

This machine dependence was considered bad design and was replaced by a more machine-independent datatype in Python 3. Python 3 integers are quite complex data structures that allow storage of arbitrary size numbers but also contain optimizations for smaller numbers.

It is not strictly necessary to understand how Python 3 integers are stored internally to work with Python, but in some cases it can be useful to know about the underlying complexities. For a small range of integers, ranging from -5 to 256, integer objects are pre-allocated. This means that an assignment such as

```python
n = 25
```

will not create the number 25 in memory. Instead, the variable n is made to reference a pre-allocated piece of memory that already contained the number 25. Consider now a statement that might appear at some other place in the program.

```python
a = 12
b = a + 13
```

The value of b is clearly 25 but this number is not stored separately. After these lines b will reference the exact same memory address that n was referencing earlier. For numbers outside this range, Python 3 will allocate memory for each integer variable separately.
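The sharing of small integer objects can be observed with the `is` operator, which compares object identity rather than value. This is a sketch of behaviour specific to the CPython interpreter. It is an implementation detail that programs should not rely on, and the larger numbers are built with `int()` at run time to keep the compiler from merging constants.

```python
def same_object(x, y):
    """True if x and y reference the very same object in memory."""
    return x is y

n = 25
a = 12
b = a + 13
print(same_object(n, b))                        # True on CPython

big_n = int("100000")
big_b = int("99999") + 1
print(big_n == big_b, same_object(big_n, big_b))  # True False on CPython
```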

Larger integers are stored in arbitrary length arrays of the C int type. This type can be either 16 or 32 bits long but Python only uses either 15 or 30 bits of each of these "digits". In the following, 32 bit ints are assumed but everything can be easily translated to 16 bit.

Numbers between $-(2^{30} - 1)$ and $2^{30} - 1$ are stored in a single int. Negative numbers are not stored as two’s complement. Instead, the sign of the number is stored separately. All mathematical operations on numbers in this range can be carried out in the same way as on regular machine integers. For larger numbers, multiple 30 bit digits are needed. Mathematical operations on these large integers operate digit by digit. In this case, the unused bits in each digit come in handy as carry values.
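The digit representation can be sketched in pure Python. The function `to_digits` below is an illustration of the idea, not the interpreter's actual code. It splits the magnitude of a number into 30 bit digits, least significant first, while the sign would be kept separately. The digit size used by the running interpreter is available as `sys.int_info.bits_per_digit`.

```python
import sys

def to_digits(value, bits_per_digit=30):
    """Split abs(value) into base 2**bits_per_digit digits, least significant first."""
    base = 1 << bits_per_digit
    value = abs(value)           # the sign is handled separately
    digits = []
    while True:
        value, digit = divmod(value, base)
        digits.append(digit)
        if value == 0:
            return digits

print(sys.int_info.bits_per_digit)   # 30 or 15, depending on the build
print(to_digits(2 ** 30 - 1))        # [1073741823]
print(to_digits(2 ** 30))            # [0, 1]
print(to_digits(-(2 ** 60 + 7)))     # [7, 0, 1]
```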

Integers in JavaScript

Compared to most other high level languages, JavaScript stands out in how it deals with integers. At a low level, JavaScript does not store integers at all. Instead, it stores all numbers in floating point format. I will discuss the details of the floating point format in a future post. When a number is used in an integer context, JavaScript can represent integers exactly as long as they fit into 53 bits. Any integer that needs more than 53 bits will suffer from rounding errors because of its internal representation.

```javascript
const a = 25;
const b = a / 2;
```

In this example, a will have a value of 25. Unlike C++, JavaScript does not perform integer divisions. This means the value stored in b will be 12.5.

JavaScript allows bitwise operations only on 32 bit integers. When a bitwise operation is performed on a number, JavaScript first converts the floating point number to a 32 bit signed integer using two’s complement. The result of the operation is subsequently converted back to a floating point format before being stored.
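The conversion step can be mimicked in Python to see what happens to values that do not fit into 32 bits. The function `to_int32` is a sketch written for this purpose and not part of any library. It drops the fractional part, keeps the lowest 32 bits, and reads them as a two's complement number.

```python
import math

def to_int32(x):
    """Mimic the conversion JavaScript applies before a bitwise operation."""
    if not math.isfinite(x):
        return 0                         # NaN and infinities become 0
    n = int(x) % 2 ** 32                 # drop the fraction, keep the low 32 bits
    return n - 2 ** 32 if n >= 2 ** 31 else n   # read as two's complement

print(to_int32(12.7))           # 12
print(to_int32(2.0 ** 31))      # -2147483648
print(to_int32(2.0 ** 32 + 5))  # 5

# A bitwise OR: convert both operands, operate, convert back to floating point
print(float(to_int32(6.9) | to_int32(1.2)))   # 7.0
```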